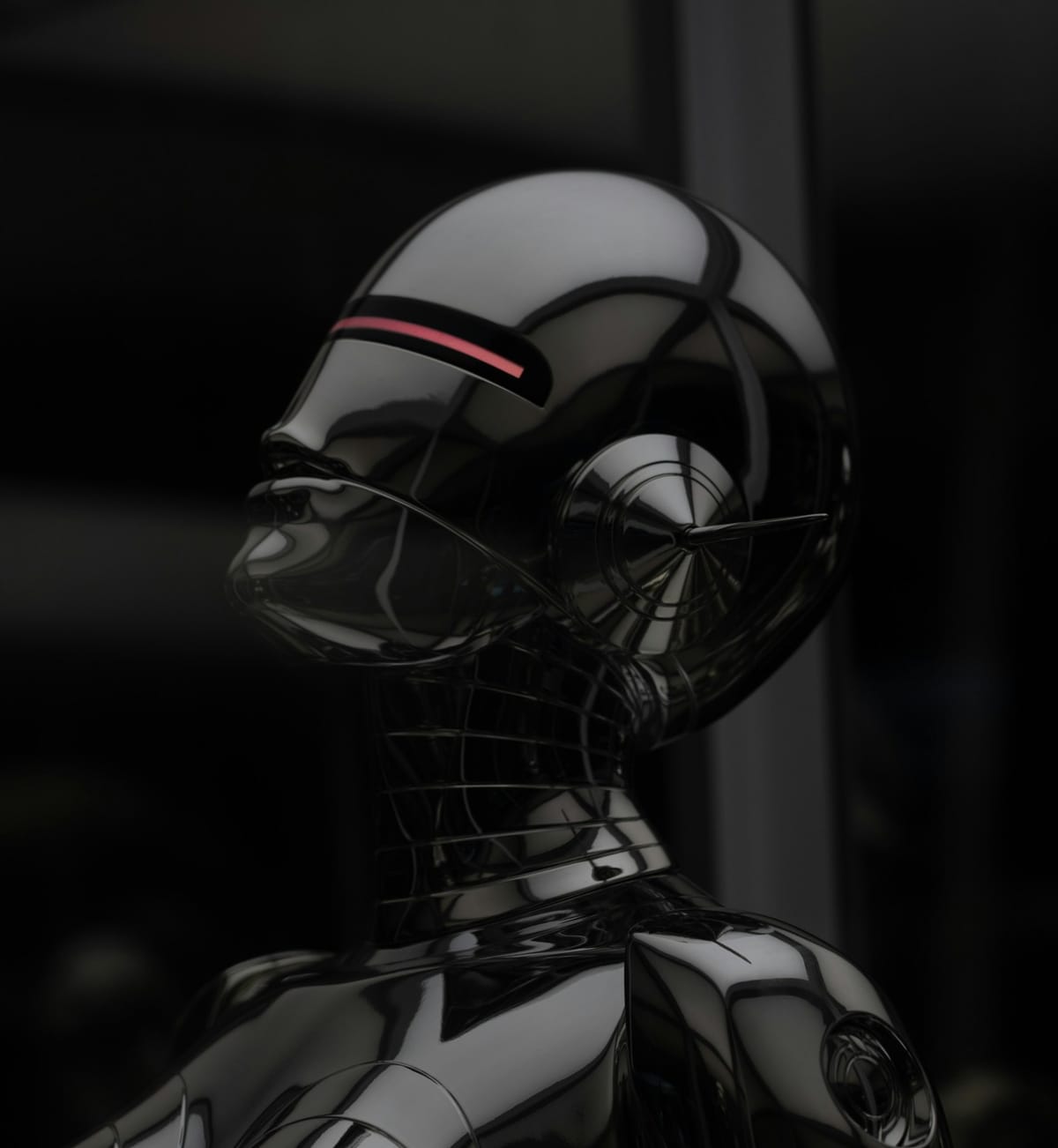

Frankenmodels: Are We Entering the Era of Hybrid AI Engines Built from Multiple Model Minds?

A new breed of hybrid AI systems is emerging — built from multiple model minds. Are we ready for the risks and rewards?

What happens when one AI model isn’t enough?

As artificial intelligence systems grow more specialized and complex, a new design trend is gaining traction: hybrid AI architectures that stitch together multiple models — each with distinct strengths — to function as one cohesive intelligence.

Some call them composite agents. Others call them modular stacks. But a growing number of researchers are using a more provocative term: Frankenmodels — AI engines built from many “minds,” fused together for speed, flexibility, and capability.

It’s not science fiction. It’s the future of scalable, smarter AI.

Why Frankenmodels Are Emerging Now

Single, monolithic models such as GPT-4 or Claude are powerful, but expensive to build and run. They require immense compute, massive training data, and alignment work that grows harder as one model is asked to do everything.

Meanwhile, domain-specific models are becoming more efficient and accurate in narrow tasks — coding, math, vision, retrieval, reasoning. Frankenmodels aim to combine these:

- A language model for dialogue

- A vision model for perception

- A search engine for real-time knowledge

- A logic model for reasoning

- A safety layer for filtering

Together, they create an AI ensemble — each part optimized for its purpose, communicating in real time.
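To make the ensemble idea concrete, here is a minimal sketch. It assumes hypothetical stand-in components (plain Python functions in place of real models), a keyword-based router, and a toy blocklist as the safety layer. The names `route`, `COMPONENTS`, `safety_filter`, and `answer` are illustrative and do not come from any specific framework.

```python
from typing import Callable, Dict

# Hypothetical stand-ins: in a real system each would wrap a model or a service.
def dialogue_model(text: str) -> str:
    return f"[dialogue] reply to: {text}"

def math_model(text: str) -> str:
    return f"[math] solving: {text}"

def search_tool(text: str) -> str:
    return f"[search] results for: {text}"

COMPONENTS: Dict[str, Callable[[str], str]] = {
    "dialogue": dialogue_model,
    "math": math_model,
    "search": search_tool,
}

# Toy blocklist, an assumption for illustration only.
BLOCKED_TERMS = {"password"}

def route(text: str) -> str:
    """Pick a component with simple keyword rules (a real router might be a classifier)."""
    lowered = text.lower()
    if any(ch.isdigit() for ch in lowered):
        return "math"
    if lowered.startswith(("who", "what", "when", "where")):
        return "search"
    return "dialogue"

def safety_filter(output: str) -> str:
    """Toy safety layer: withhold any output that contains a blocked term."""
    if any(term in output.lower() for term in BLOCKED_TERMS):
        return "[filtered]"
    return output

def answer(text: str) -> str:
    """Route the request to one component, then pass its output through the safety layer."""
    name = route(text)
    return safety_filter(COMPONENTS[name](text))

if __name__ == "__main__":
    for prompt in ["Hello there", "Compute 2 + 2", "What is a Frankenmodel?"]:
        print(route(prompt), "->", answer(prompt))
```

The point of the sketch is the shape, not the rules: routing, specialist components, and filtering are separate pieces that can each be replaced.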

Versions of this modular approach appear in systems like OpenAI’s GPT-4 with tools, Anthropic’s Claude with memory, and emerging agentic frameworks like AutoGPT and MetaGPT.

Benefits: Smarter, Safer, More Adaptable AI

By blending models, developers can:

- Reduce costs by using smaller, specialized components

- Improve safety with dedicated moderation and alignment modules

- Boost performance in complex tasks that require multiple forms of intelligence

- Update parts of the stack independently, like replacing a search module without retraining the whole system

Frankenmodels also allow for dynamic control — giving users or agents the ability to orchestrate workflows across multiple AIs on demand.
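Independent updates usually come down to coding against an interface rather than a concrete module. The sketch below assumes a hypothetical `SearchModule` interface and two toy implementations; `Assistant`, `KeywordSearch`, and `ExactPhraseSearch` are invented for illustration.

```python
from typing import List, Protocol

class SearchModule(Protocol):
    """Hypothetical interface: anything with a matching search() method qualifies."""
    def search(self, query: str) -> List[str]: ...

class KeywordSearch:
    """Returns documents that contain at least one query term."""
    def __init__(self, docs: List[str]) -> None:
        self.docs = docs

    def search(self, query: str) -> List[str]:
        terms = query.lower().split()
        return [d for d in self.docs if any(t in d.lower() for t in terms)]

class ExactPhraseSearch:
    """Returns documents that contain the whole query as a phrase."""
    def __init__(self, docs: List[str]) -> None:
        self.docs = docs

    def search(self, query: str) -> List[str]:
        return [d for d in self.docs if query.lower() in d.lower()]

class Assistant:
    """Depends only on the SearchModule interface, not on a concrete class."""
    def __init__(self, search: SearchModule) -> None:
        self.search = search

    def answer(self, query: str) -> str:
        hits = self.search.search(query)
        return hits[0] if hits else "no result"

if __name__ == "__main__":
    docs = ["Hybrid systems combine models", "Search modules can be swapped"]
    assistant = Assistant(KeywordSearch(docs))
    print(assistant.answer("swapped modules"))
    # Swap the search module; Assistant itself is untouched.
    assistant.search = ExactPhraseSearch(docs)
    print(assistant.answer("swapped modules"))
```

Swapping the module changes the answers, which is the flip side of the benefit: each replacement still needs to be tested against the rest of the stack.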

The Risks: Black Boxes Inside Black Boxes

But there’s a dark side. As we stack models atop each other:

- Interpretability worsens — it’s hard to know which model did what

- Debugging becomes harder, especially when outputs conflict

- Accountability gets murky — who’s responsible when the system goes wrong?

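Most dangerously, Frankenmodels can compound the flaws of their modules instead of canceling them out, leading to emergent behavior no one designed. A search module that returns a stale page, for example, can be restated by a fluent language model as confident fact.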

As DeepMind researcher Pushmeet Kohli warned:

“Hybrid systems can outperform — but also overreact in ways no one model would alone.”

Conclusion: The Age of AI Assemblies Has Arrived

We’re entering a new phase of AI evolution — from monolithic minds to modular collectives. Frankenmodels are not a flaw. They’re a feature of AI’s next frontier: complex, coordinated intelligence.

The key challenge? Building architectures that are not just more capable — but more transparent, testable, and trustworthy.

Because stitching minds together is easy. Aligning them is where the real work begins.

✅ Actionable Takeaways:

- Explore modular frameworks like LangChain or AutoGPT, and research approaches such as Toolformer, to build Frankenmodel-style workflows

- Focus on interface-level transparency when integrating multiple models: define what each model receives and returns, and record it at every boundary

- Monitor output chains carefully to avoid silent failures in hybrid systems
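One way to act on the monitoring advice is to record every step of a chain and stop when a step fails or returns nothing. The sketch below is a simplified illustration, not a production tracer; `TraceEntry` and `run_chain` are hypothetical names, and treating empty output as a failure is an assumption.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

@dataclass
class TraceEntry:
    step: str     # which component ran
    input: str    # what it received
    output: str   # what it returned, or an error message
    ok: bool      # False if the step raised or returned empty output

Step = Tuple[str, Callable[[str], str]]

def run_chain(steps: List[Step], text: str) -> Tuple[str, List[TraceEntry]]:
    """Run steps in order; return the last good output and a per-step trace."""
    trace: List[TraceEntry] = []
    current = text
    for name, fn in steps:
        try:
            result = fn(current)
        except Exception as exc:
            # Record the failure instead of letting it vanish inside the chain.
            trace.append(TraceEntry(name, current, f"error: {exc}", False))
            break
        ok = bool(result.strip())  # assumption: empty output counts as a failure
        trace.append(TraceEntry(name, current, result, ok))
        if not ok:
            break
        current = result
    return current, trace

if __name__ == "__main__":
    steps: List[Step] = [
        ("normalize", str.upper),
        ("summarize", lambda s: ""),      # simulates a component that silently returns nothing
        ("format", lambda s: s + "!"),    # never reached
    ]
    final, trace = run_chain(steps, "hi")
    for entry in trace:
        print(entry)
```

Because each entry names the step that produced it, the trace also answers the "which model did what" question raised under the risks.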