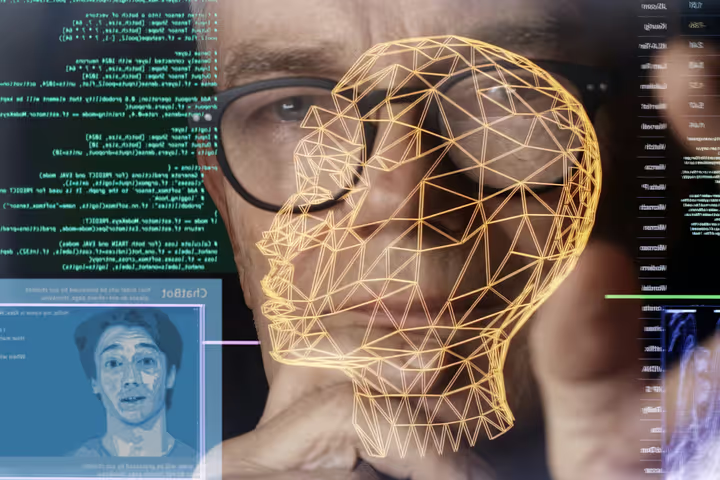

Inside the AI Audit Trail: Tracking How Machines Think

Explore how AI audit trails are redefining transparency by revealing how algorithms think, decide, and evolve, and how they make machine reasoning accountable and traceable.

In the world of artificial intelligence, understanding why a model makes a decision is becoming as important as the decision itself. The AI audit trail is an emerging framework for transparency: a record of how an algorithm learned, reasoned, and acted.

It’s the difference between a black box and a system that can explain its own thinking.

Building a Memory of Decisions

Every time an AI system is trained or makes a prediction, it works through a cascade of choices: which variables to prioritize, which weights to adjust during training, which outcomes to rank highest.

Until recently, most of these internal steps went unrecorded. Now, companies like Arthur AI, Fiddler, and Truera offer platforms that log model inputs, outputs, and explanations over time. The result is a forensic-style record of model behavior that can be examined after the fact.
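Below is a minimal sketch of what such a decision log could look like. It assumes an append-only design in which each record stores the hash of the previous one, so any later edit to a past record breaks the chain. The `AuditRecord` fields and the `AuditLog` class are hypothetical illustrations. They are not the schema or API of Arthur AI, Fiddler, or Truera.

```python
import hashlib
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


@dataclass
class AuditRecord:
    # Hypothetical schema: one record per model decision.
    model_id: str
    model_version: str
    inputs: dict
    output: str
    attributions: dict  # per-feature contribution scores
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )
    prev_hash: str = ""
    record_hash: str = ""


class AuditLog:
    """Append-only, hash-chained log of model decisions (illustrative only)."""

    def __init__(self):
        self.records = []

    @staticmethod
    def _digest(record):
        # Hash every field except record_hash itself, with a stable key order.
        payload = asdict(record)
        payload.pop("record_hash")
        encoded = json.dumps(payload, sort_keys=True).encode()
        return hashlib.sha256(encoded).hexdigest()

    def append(self, record):
        # Link the new record to the hash of the one before it.
        record.prev_hash = self.records[-1].record_hash if self.records else GENESIS
        record.record_hash = self._digest(record)
        self.records.append(record)
        return record

    def verify(self):
        # Recompute the chain; an edited or reordered record fails the check.
        prev = GENESIS
        for r in self.records:
            if r.prev_hash != prev or r.record_hash != self._digest(r):
                return False
            prev = r.record_hash
        return True


log = AuditLog()
log.append(AuditRecord("credit-model", "1.4.2",
                       {"income": 52000, "debt_ratio": 0.41}, "deny",
                       {"income": -0.12, "debt_ratio": 0.35}))
print(log.verify())  # True
log.records[0].output = "approve"  # simulate tampering with history
print(log.verify())  # False
```

Hash chaining makes tampering evident, but it does not prevent it. A production system would also need durable, access-controlled storage.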

Why Explainability Matters

AI systems now influence everything from healthcare diagnostics to credit approvals. But without traceability, accountability falters.

When a loan is denied or a diagnosis flagged, the audit trail can show how the model reached that conclusion and help identify whether bias, error, or drift crept into the process. It's not about humanizing machines; it's about human-scale responsibility.
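Drift is one of the easier failure modes to quantify from logged data. One common metric is the Population Stability Index (PSI), which compares the distribution of a score or feature at training time with the distribution seen in production. The sketch below uses equal-width bins over the combined range for simplicity. Many implementations instead derive bins from quantiles of the reference sample. The `psi` function name and the `eps` floor are illustrative choices.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a reference sample and a live sample.

    PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are
    the fractions of each sample falling into bin i.
    """
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    width = (hi - lo) / bins or 1.0  # avoid division by zero if all values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = min(int((v - lo) / width), bins - 1)  # clamp the max value into the last bin
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm stays finite.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


training_scores = [i / 100 for i in range(100)]
live_scores = [0.5 + i / 200 for i in range(100)]  # shifted toward higher scores
print(psi(training_scores, training_scores))  # 0.0
print(psi(training_scores, live_scores) > 0.25)  # True
```

A widely used rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift worth investigating. These thresholds are conventions, not standards.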

Turning Opacity into Evidence

Modern audit frameworks merge monitoring with interpretability. Fiddler AI's Model Performance Management platform, for instance, pairs performance monitoring with explanations of which input features drove a given prediction. This surfaces the internal logic behind a model's output.

It allows engineers and regulators to interrogate not just what a system decided, but why it preferred one outcome over another.
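The simplest case of "why this outcome" is a linear scoring model. There, each feature's contribution relative to a baseline input is its weight times the feature's deviation from the baseline, and these contributions sum exactly to the difference between the two scores. The sketch below illustrates that idea. The function name and inputs are hypothetical, and it makes no claim about how Fiddler or any other vendor computes its explanations. Nonlinear models need techniques such as SHAP or integrated gradients instead.

```python
def linear_attributions(weights, bias, x, baseline):
    """Per-feature contributions for a linear score: w_f * (x_f - baseline_f)."""
    contribs = {f: weights[f] * (x[f] - baseline[f]) for f in weights}
    score = bias + sum(weights[f] * x[f] for f in weights)
    base_score = bias + sum(weights[f] * baseline[f] for f in weights)
    return score, base_score, contribs


score, base, contribs = linear_attributions(
    weights={"debt_ratio": 2.0, "years_employed": -0.1},
    bias=0.5,
    x={"debt_ratio": 0.6, "years_employed": 2.0},
    baseline={"debt_ratio": 0.3, "years_employed": 5.0},
)
# The contributions account for the full gap between this input and the baseline.
print(contribs, abs(sum(contribs.values()) - (score - base)) < 1e-9)
```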

Regulation as Catalyst

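Global policy is catching up. The EU AI Act requires high-risk AI systems to support automatic event logging and to be transparent enough for users to interpret their outputs. NIST's AI Risk Management Framework, a voluntary guideline rather than a law, likewise emphasizes explainability and traceability.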

These standards are turning the AI audit trail from a technical option into a practical and ethical necessity.

The Ethics of Visibility

But transparency isn't enough. If audit trails expose sensitive data or proprietary algorithms, they could introduce new vulnerabilities. Researchers are therefore developing selective transparency: systems that reveal reasoning to regulators without leaking critical design secrets. The goal is balance: insight without intrusion.
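One small building block for selective transparency is a salted hash commitment. Sensitive fields in a logged decision are replaced with commitments, while the decision and its explanation stay readable. An auditor who is later given a field's salt can confirm a claimed value without the raw data ever appearing in the shared log. The sketch below assumes this commit-and-reveal scheme. `regulator_view`, `open_commitment`, and the field names are hypothetical. The sketch addresses data exposure only and does nothing to protect proprietary model internals.

```python
import hashlib
import secrets


def _commit(salt, value):
    return "sha256:" + hashlib.sha256((salt + str(value)).encode()).hexdigest()


def regulator_view(record, sensitive):
    """Return a copy with sensitive fields replaced by salted hash commitments,
    plus the salts, which the operator keeps private until a reveal is needed."""
    view, salts = {}, {}
    for key, value in record.items():
        if key in sensitive:
            salts[key] = secrets.token_hex(16)  # fresh random salt per field
            view[key] = _commit(salts[key], value)
        else:
            view[key] = value
    return view, salts


def open_commitment(view, salts, key, claimed_value):
    """Check a claimed value against the commitment once its salt is revealed."""
    return view[key] == _commit(salts[key], claimed_value)


record = {"applicant_name": "A. Lovelace", "decision": "deny",
          "attributions": {"debt_ratio": 0.35, "income": -0.12}}
view, salts = regulator_view(record, sensitive={"applicant_name"})
print(view["decision"], view["attributions"])  # reasoning stays visible
print(open_commitment(view, salts, "applicant_name", "A. Lovelace"))  # True
```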

From Audit to Understanding

In the near future, AI systems may not just keep logs; they may narrate their reasoning. Imagine models that generate real-time, plain-language explanations of their choices.
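Today, a basic version of such narration can already be built from logged feature attributions with a fixed template. The sketch below ranks features by the magnitude of their contribution and phrases the top few as a sentence. The `explain` function and its wording are hypothetical. This template approach is far simpler than the free-form narration imagined above.

```python
def explain(decision, attributions, top_k=2):
    """Turn per-feature attributions into a one-sentence explanation.

    Assumes positive attributions push toward the decision and negative ones
    push against it.
    """
    ranked = sorted(attributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    parts = []
    for feature, weight in ranked[:top_k]:
        direction = "pushed toward" if weight > 0 else "pushed against"
        parts.append(f"{feature} {direction} this outcome ({weight:+.2f})")
    return f"Decision: {decision}. Main factors: " + "; ".join(parts) + "."


print(explain("deny", {"debt_ratio": 0.35, "income": -0.12, "age": 0.01}))
# Decision: deny. Main factors: debt_ratio pushed toward this outcome (+0.35); income pushed against this outcome (-0.12).
```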

Such tools could help build trust between human judgment and machine cognition, replacing opacity with collaboration.

The Accountable Algorithm

The audit trail marks a deeper cultural shift. It acknowledges that intelligence, even if synthetic, must coexist with ethics, traceability, and consent.

In tracing the mind of the machine, we’re really learning to see our own values reflected back.