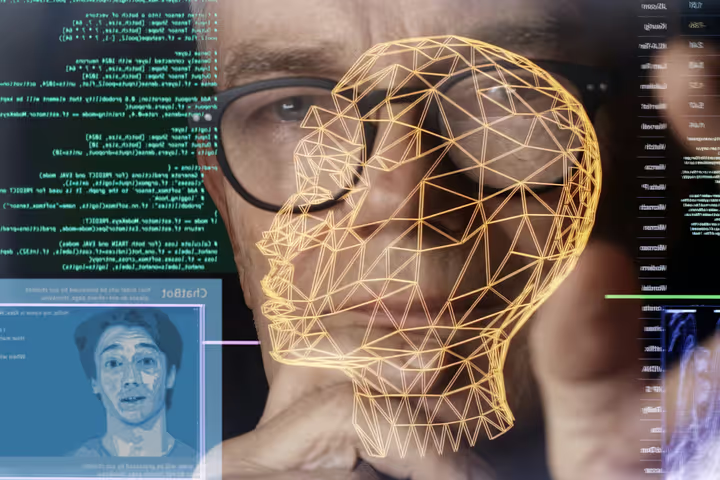

Model Mirage: When Open-Source AIs Look Transparent But Learn in Shadows

Open-source AIs promise transparency—but are they as open as they seem? Dive into the hidden shadows of today’s most "transparent" models.

They wear the badge of openness, but what do they really reveal?

In the age of democratized AI, open-source models like Meta’s LLaMA, Mistral, and Falcon promise accessibility, community oversight, and transparency. But behind the public weights and GitHub repositories lies a growing paradox: these models may appear open, but their learning process is increasingly opaque.

This is the Model Mirage—where transparency becomes more branding than reality.

The Promise of Openness

Open-source AI models have long been seen as the ethical alternative to proprietary black boxes. They enable:

- Public audits and reproducibility

- Faster academic and developer experimentation

- Lower barriers for innovation in underfunded regions and sectors

- Community-driven safety tools and fine-tuning techniques

The AI community has celebrated this movement as a pushback against Big Tech dominance.

But there’s a catch.

What’s Really Open?

Most so-called "open" models release only the final trained weights, with little insight into:

- The full pretraining dataset

- The actual pretraining process and compute scale

- The filtering methods or data redactions used

- Reinforcement learning from human feedback (RLHF) loops or other alignment tweaks

- Internal safety guardrails or model patching updates

This creates a false sense of visibility. Developers can use the tool but can't always trace what shaped it. Worse, many large open-source models are trained on data drawn from closed platforms or obtained under vague licenses, using methods that would fail basic academic reproducibility standards.

Why the Shadows Matter

Transparency isn’t just about ideals—it impacts real-world trust and risk.

- If we don’t know what a model saw, we can’t anticipate where it might hallucinate

- If we don’t know how alignment was applied, we can’t predict ethical behavior boundaries

- If we can’t trace its evolution, malicious fine-tuning or misuse becomes harder to detect

This is especially critical in sectors like healthcare, finance, or law, where "explainability" is often not optional: it can be a regulatory requirement.

The Mirage Effect

Some AI labs label their models as “open” while maintaining closed-door practices in:

- Pretraining recipe secrecy

- Strategic dataset redaction

- Restrictive or ambiguous licensing (e.g., "open for research use only")

- Proprietary alignment frameworks built atop open cores

In essence, we’re entering an era of open-surface, closed-core AI. The shell is shared. The soul is not.

🔚 Conclusion: Transparency Needs More Than Source Code

The open-source label must not become a marketing trick. True openness means clarity in how a model was made, not just what it outputs.

If the industry doesn't standardize documentation, lineage tracing, and governance norms for open models, we risk trusting systems we don't truly understand, again.

Because in a world of model mirages, transparency without traceability is just another illusion.