OpenAI Unveils Tools to Detect AI-Generated Videos

OpenAI is developing tools to detect AI-generated videos. Explore how this technology works, why it matters, and what it means for misinformation and media integrity.

Can you trust what you see online anymore?

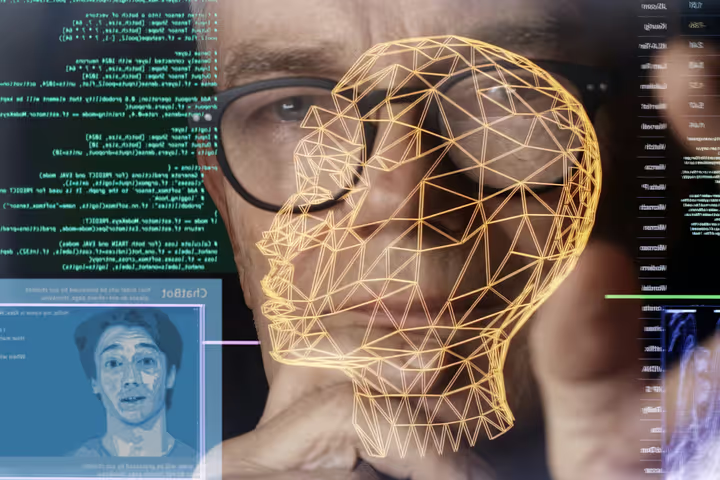

As AI-generated videos become increasingly realistic, OpenAI has unveiled early tools for detecting synthetic video. The move could strengthen efforts against misinformation, deepfakes, and digital forgery.

As the line between real and fake footage blurs, OpenAI's efforts address one important part of a larger problem: maintaining public trust in visual media.

Why AI-Generated Video Detection Is Urgent

The rise of tools such as OpenAI's own Sora, Runway, and Pika Labs has made it easier than ever to create hyperrealistic video from simple text prompts. The creative potential is immense, but so are the risks.

From election interference to celebrity hoaxes and financial scams, deepfake videos are rapidly becoming a serious threat to online integrity. A Europol Innovation Lab report cited expert estimates that as much as 90% of online content could be synthetically generated by 2026.

OpenAI’s new detection tools aim to keep pace with this trend by spotting the subtle digital signatures that AI-generated visuals leave behind.

How OpenAI's Detection System Works

OpenAI is using a mix of machine learning models, watermarking, and metadata analysis to identify AI-generated videos. These tools analyze pixel-level patterns, motion inconsistencies, and model-specific artifacts that are hard for humans to detect.
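To make "motion inconsistencies" more concrete, here is a toy Python sketch. It assumes the video has already been decoded into grayscale frames stored in a NumPy array, and it flags frame-to-frame transitions whose pixel change is a statistical outlier compared with the rest of the clip. The function name `flag_motion_spikes` and the z-score threshold of 3.0 are hypothetical choices for illustration. This is not OpenAI's detector, which the company describes as relying on trained models rather than a single hand-written rule.

```python
import numpy as np


def flag_motion_spikes(frames: np.ndarray, z_threshold: float = 3.0) -> list[int]:
    """Return indices of frame transitions whose change is a statistical outlier.

    Hypothetical toy heuristic, not OpenAI's method.
    frames: array shaped (num_frames, height, width) holding grayscale values.
    """
    if frames.ndim != 3 or frames.shape[0] < 3:
        raise ValueError("expected at least 3 grayscale frames shaped (n, h, w)")
    # Convert to float first so uint8 subtraction cannot wrap around,
    # then take the mean absolute difference between consecutive frames.
    diffs = np.abs(np.diff(frames.astype(np.float64), axis=0)).mean(axis=(1, 2))
    mean, std = diffs.mean(), diffs.std()
    if std == 0:
        return []  # every transition changes by the same amount: nothing stands out
    z_scores = (diffs - mean) / std
    # Index i refers to the transition from frame i to frame i + 1.
    return [int(i) for i in np.flatnonzero(z_scores > z_threshold)]
```

Real footage also contains legitimate spikes, such as scene cuts and camera flashes, so a rule like this could at most serve as one input to a learned model rather than a verdict on its own.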

The detection is designed to scale and to integrate with existing systems, so it can be embedded in social media sites, video hosting services, and newsroom workflows.
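A platform integrating such tools would still need to turn several detector outputs into a single moderation action. The Python sketch below shows one possible policy. Everything in it is an assumption for illustration: the `DetectionSignals` fields, the `label_decision` function, the action names, and the 0.8 review threshold are hypothetical and do not describe any OpenAI API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DetectionSignals:
    # Hypothetical fields; a real pipeline would fill them from its own
    # watermark decoder, metadata parser, and classifier.
    watermark_detected: bool
    provenance_claims_ai: Optional[bool]  # None when no provenance metadata is present
    classifier_score: float  # estimated probability the video is synthetic, 0.0 to 1.0


def label_decision(signals: DetectionSignals, review_threshold: float = 0.8) -> str:
    """Map detector outputs to a moderation action (illustrative policy only)."""
    if not 0.0 <= signals.classifier_score <= 1.0:
        raise ValueError("classifier_score must be between 0 and 1")
    # Explicit markers (an embedded watermark or a provenance record that
    # declares AI generation) trigger an automatic label.
    if signals.watermark_detected or signals.provenance_claims_ai:
        return "label_ai_generated"
    # A statistical classifier can be wrong, so a high score alone only
    # routes the video to human review instead of labeling it outright.
    if signals.classifier_score >= review_threshold:
        return "human_review"
    return "no_action"
```

The asymmetry is the point of this design: explicit markers act automatically, while a probabilistic score only escalates to a person, which limits the harm done by false positives.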

This aligns with OpenAI’s broader safety agenda, which includes building “verifiers” for its generative models and working with policymakers on responsible AI governance.

Balancing Innovation and Accountability

While detection is necessary, it raises complex questions about privacy, free speech, and platform responsibility. Should all AI-generated content be labeled? Who controls the verification pipelines? What happens when the detection tech itself is gamed?

OpenAI acknowledges these challenges and is calling for a multi-stakeholder approach that brings together governments, researchers, civil society, and other AI developers to create common standards and avoid a patchwork of incompatible tools.

This echoes initiatives from the Partnership on AI and the Coalition for Content Provenance and Authenticity (C2PA), which are pushing for interoperable solutions across platforms.

What This Means for the Future of AI and Media

OpenAI's work on detecting AI-generated video is a step toward restoring credibility in digital content. As generative video becomes mainstream, detection and labeling will likely become baseline requirements for any responsible media ecosystem.

For creators, it’s a reminder to use generative tools transparently. For platforms, it's a signal to start integrating detection tech now. And for consumers, it's a prompt to stay skeptical and informed in a world where seeing is no longer believing.