Prompt Collapse: When AI Models Forget How to Think in the Real World

Today’s AI sounds smart—but is it losing touch with reality? Discover the risks of prompt collapse and how AI is drifting from real-world logic.

Ask an AI to summarize a research paper, and it might give you flawless prose. Ask it to explain what that research means in a real-world context, and you might get baffling nonsense—or worse, confident hallucinations.

This isn’t a glitch. It’s a symptom of what some observers are calling Prompt Collapse: the moment when AI models become so optimized for prompts, patterns, and token prediction that they begin losing grip on grounded reasoning.

What Is Prompt Collapse?

Prompt collapse happens when large language models (LLMs) begin to overfit to language tasks while failing to retain real-world reasoning, logic, or factual grounding. In simpler terms: they’ve mastered the art of responding—but lost the plot of why they’re responding.

It’s the AI version of a student who aces the test by memorizing patterns, not understanding concepts.

And in a world increasingly reliant on LLMs for decision-making, education, hiring, research, and even law, that’s not just ironic. It’s dangerous.

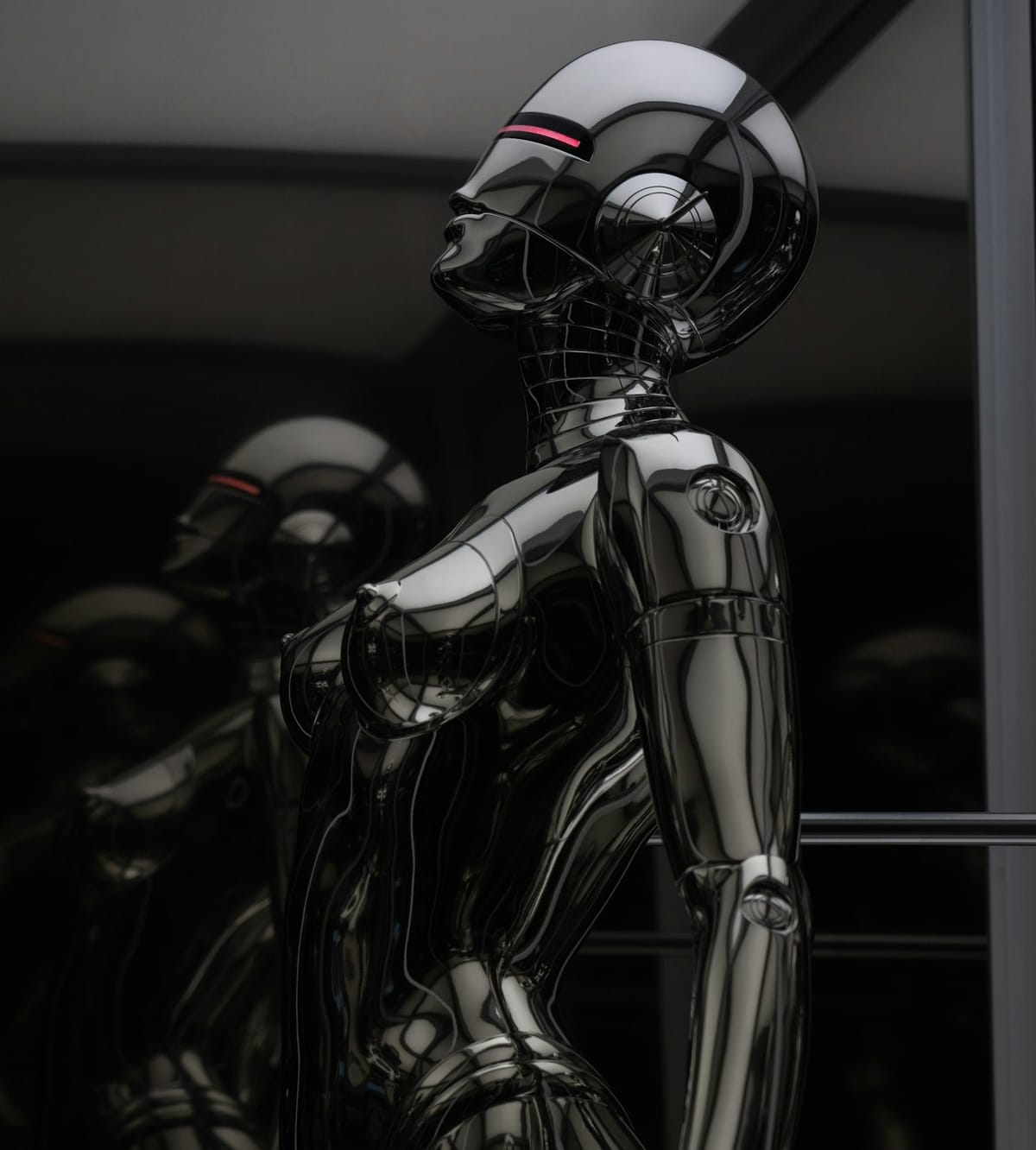

How AI Became a Mirror, Not a Mind

The secret behind today’s most powerful AIs, like GPT-4 or Claude, is next-token prediction. These models don’t “know” facts; they predict the most likely next token (roughly, a word or word fragment) based on patterns in their training data.

This works brilliantly for tasks like:

- Writing emails

- Translating between languages

- Generating summaries

But when asked to reason about the world—to understand causality, intent, or physical constraints—they falter. Why? Because their training isn't rooted in reality. It's rooted in text.
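To make "rooted in text" concrete, here is a toy sketch of predicting the next word from word-pair counts alone. It is far simpler than a real LLM, and the corpus and the function names `train_bigram` and `predict_next` are invented for illustration.

```python
from collections import Counter, defaultdict

def train_bigram(corpus: str) -> dict:
    """Count, for each word, which words follow it in the corpus."""
    words = corpus.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def predict_next(model: dict, word: str):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical training text: "blue" follows "is" twice, "green" once.
corpus = "the sky is blue . the sea is blue . the grass is green ."
model = train_bigram(corpus)

print(predict_next(model, "is"))    # blue
print(predict_next(model, "moon"))  # None
```

The toy model answers "blue" because that word followed "is" most often in its text, not because it checked the sky. Real models are vastly more capable, but the training signal is still text.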

Over time, as models train more on AI-generated data (a phenomenon sometimes called model cannibalism, and studied in the research literature as model collapse), they risk becoming echo chambers of optimization: less connected to reality, more tuned to artificial cues.
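A rough way to see the echo-chamber effect is a toy simulation in which each "generation" is built only by sampling from the previous generation's output. This is not how LLM training actually works; the stand-in "model" is just random resampling, and the names `next_generation` and `distinct_counts` are assumptions made for this sketch.

```python
import random

def next_generation(corpus: list, rng: random.Random) -> list:
    """'Train' on a corpus and 'generate' a same-sized one by sampling from it with replacement."""
    return [rng.choice(corpus) for _ in corpus]

def distinct_counts(corpus: list, generations: int, seed: int = 0) -> list:
    """Track how many distinct items survive as each generation learns only from the last."""
    rng = random.Random(seed)
    counts = [len(set(corpus))]
    for _ in range(generations):
        corpus = next_generation(corpus, rng)
        counts.append(len(set(corpus)))
    return counts

# Hypothetical starting corpus of 50 distinct "human-written" items.
human_corpus = [f"fact_{i}" for i in range(50)]
counts = distinct_counts(human_corpus, generations=20)
print(counts)
```

Resampling can never introduce an item that the previous generation lacked, so the number of distinct items can only stay flat or shrink. Rare items tend to vanish first.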

The Real-World Risks of Prompt Collapse

The implications are far from academic.

- In healthcare: A model might recommend treatment paths based on flawed analogies, not clinical accuracy.

- In law: AI-generated summaries may misrepresent legal arguments with confident-sounding nonsense.

- In science: Hallucinated citations or false causal links could derail research synthesis.

- In journalism: Auto-written stories may echo biases or present fictional “facts” as verified claims.

Prompt collapse isn't about AI breaking. It’s about AI appearing smarter while actually growing dumber.

Can We Ground AI Again?

Researchers are exploring ways to bring AI “back to Earth”:

- Hybrid models that combine symbolic reasoning with deep learning

- Retraining on real-world tasks with consequences and feedback loops

- Reinforcement learning from human feedback (RLHF) that rewards truthfulness over fluency

- Data provenance tracking to reduce reliance on synthetic content
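The last item in the list, data provenance tracking, could look something like the minimal sketch below. It assumes every training document already carries a trustworthy origin label, which is the hard part in practice. The `Document` class, the label values, and both helper functions are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Document:
    text: str
    origin: str  # hypothetical label: "human", "synthetic", or "unknown"

def synthetic_share(docs: list) -> float:
    """Fraction of documents labeled as synthetic (0.0 for an empty list)."""
    if not docs:
        return 0.0
    return sum(d.origin == "synthetic" for d in docs) / len(docs)

def filter_by_provenance(docs: list, allowed: tuple = ("human",)) -> list:
    """Keep only documents whose origin label is in the allowed set."""
    return [d for d in docs if d.origin in allowed]

# Invented sample data for illustration.
docs = [
    Document("Field notes from a clinical trial.", "human"),
    Document("Auto-generated summary of a summary.", "synthetic"),
    Document("Scraped page with no metadata.", "unknown"),
    Document("Chatbot answer reposted on a forum.", "synthetic"),
]

print(synthetic_share(docs))            # 0.5
print(len(filter_by_provenance(docs)))  # 1
```

Treating "unknown" as not allowed by default is a deliberately conservative choice in this sketch; a real pipeline would have to weigh that against losing useful data.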

But the deeper question remains: Are we building tools that understand, or tools that imitate?

Conclusion: When Performance Hides a Collapse of Meaning

Prompt collapse reminds us that sounding intelligent is not the same as being intelligent.

As we rely more on LLMs to think, decide, and create, we must demand models that are not just articulate—but grounded. Because if AI forgets how to think in the real world, it won’t matter how well it writes.