Promptnesia: When AI Forgets How to Think Without You

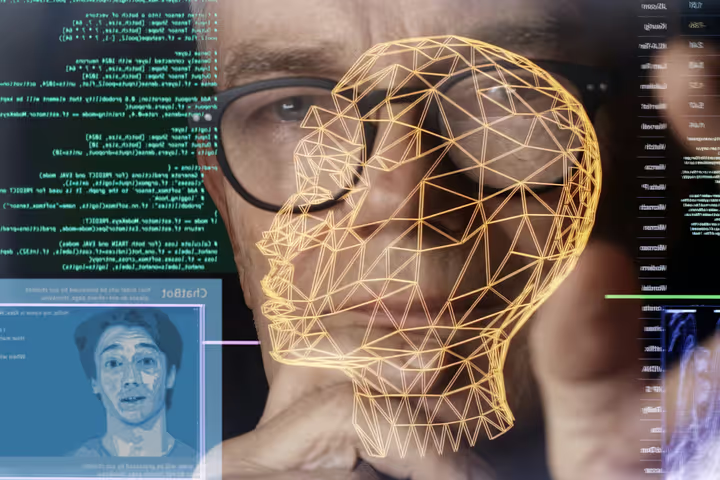

Has AI become too dependent on prompts to function? Explore how intelligent systems lose initiative when they can’t act without human cues.

As generative AI becomes the default interface for productivity, creativity, and automation, a strange dependency is forming—one that reveals a deep limitation: AI often doesn’t know what to do unless we tell it.

This isn’t just about prompting ChatGPT to write an email or Midjourney to generate a fantasy landscape. It’s about a growing truth behind the scenes of the AI boom:

We’ve built machines that appear smart, but only in response to us.

Without prompts, they don’t reason.

Without instructions, they don’t initiate.

Without humans, they freeze.

Call it Promptnesia—the inability of AI to think without being told how.

The Illusion of Autonomy

Modern AI can complete Shakespearean sonnets, write Python scripts, or draft legal memos. But not on its own.

Under the hood, these systems don’t start with goals. They start with your words. The intelligence we see is reactive, not proactive.

Even agentic systems such as AutoGPT or Devin require detailed scaffolding, an explicit goal description, and feedback from their environment. Without that framework, they loop, stall, or hallucinate.

We call it “intelligent,” but intelligence usually implies initiative. AI, for now, lacks that spark.

Prompt Dependency = Human Bottleneck

This dependency creates a paradox: as AI scales, so does the demand for good prompting. We are the bottleneck.

Enterprises are hiring “prompt engineers.” Schools are teaching prompt crafting. Whole products are being redesigned around how humans talk to machines, because the machines won’t move until we speak.

This raises a question for the future: if AI truly augments us, shouldn’t it be able to anticipate needs, recognize patterns, and act without waiting for a command?

Why Promptnesia Matters

Promptnesia reveals a critical weakness in today’s AI systems:

- They can’t form original intentions

- They don’t understand context unless it’s spelled out

- They’re reactive in design, not reflective

This limits autonomous agents, real-time collaboration, and any hope for AI that can co-create or co-pilot alongside humans in truly dynamic environments.

It also entrenches a one-way reliance on human input: we prompt, they respond. But what happens when we need systems that initiate?

Conclusion: Beyond Prompt-Based Thinking

To move beyond Promptnesia, AI must evolve toward contextual cognition—where systems can infer, adapt, and act with minimal human cueing.

Until then, every “intelligent” response is still a reflection of the prompter—not the machine.

We’ve built powerful engines. But until someone turns the key, they sit silent.