Synthetic Conscience: When Morality Becomes a UX Feature

When ethics becomes adjustable, is AI still accountable? Explore how morality is turning into a customizable feature—not a foundational principle.

As AI systems increasingly influence decisions once governed by human judgment (content moderation, criminal sentencing, hiring, even military targeting), a new trend is emerging: morality as a setting. Not a principle. Not a philosophy. A feature.

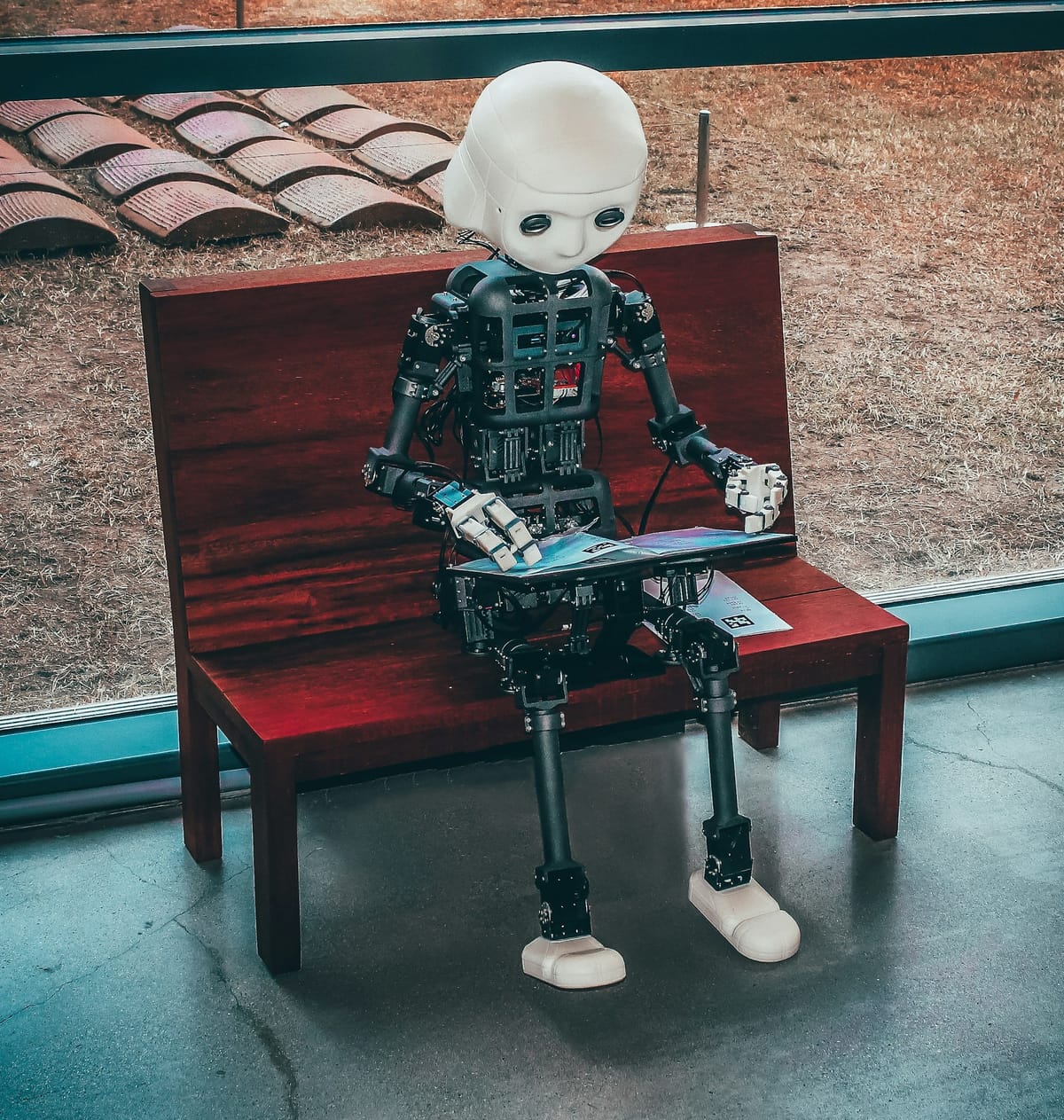

Call it a synthetic conscience—a layer of ethical behavior that can be turned on, tuned down, or tailored to user preference.

In a world where “dark mode” and “safe mode” live side by side, morality is now an interface decision. And that should terrify us.

From Built-In Values to Optional Filters

Early AI developers dreamed of aligning machines with universal human values. But consensus proved elusive. So instead of building ethics in, we started bolting them on.

Now, many AI products let users:

- Adjust content sensitivity

- Enable or disable fairness filters

- Opt into “responsible mode” or “uncensored mode”

- Choose political or religious bias settings in chatbots

The question is no longer just what the AI says, but how you want it to behave.

The result? A fractured ecosystem of moral relativism, where the same model can sound like Gandhi or Machiavelli depending on the user interface.

When Ethics Becomes Preference

Let’s be clear: customization isn’t always bad. Cultural nuance, accessibility, and inclusion often require adaptability.

But when core ethical boundaries become opt-in, we cross into dangerous territory.

Do you want your AI assistant to lie for you?

Should it prioritize profits over people in customer service decisions?

Can it spread misinformation if the user asks nicely?

The line between personalization and permission starts to blur—especially when profit incentives reward engagement over ethics.

Who Designs the Default Conscience?

Every “ethical setting” has a default. And behind that default is a designer: a team, a company, and the goals they serve.

Should OpenAI, Google, Meta, or startups decide what’s moral?

Should governments legislate ethics modes?

Should users vote on right vs. wrong?

The danger of a synthetic conscience is that it simulates ethical reasoning without resolving the underlying questions. It looks responsible, but it’s customizable. And that means accountability can evaporate as quickly as someone opens a settings menu.

Conclusion: Don't Let Ethics Become a Checkbox

Morality isn’t a UI feature. It’s not a toggle, a filter, or a mode. When we reduce conscience to a customizable setting, we don’t make AI more ethical—we make ethics optional.

Real ethical design means building values into the foundation, not the preferences pane. Because if conscience becomes synthetic, then so do consequences.