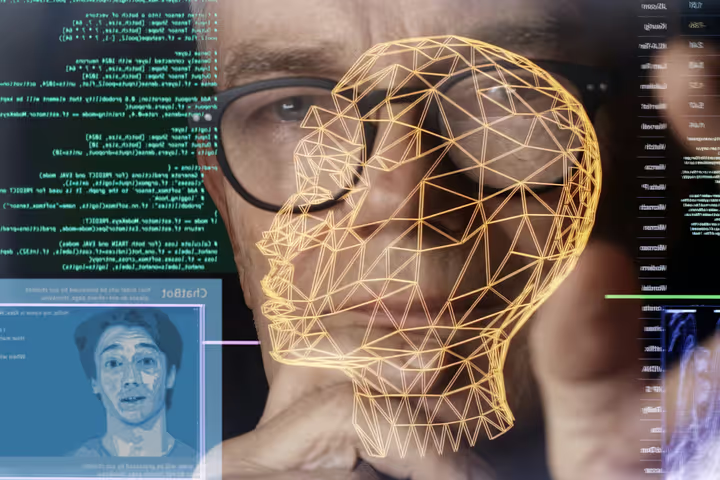

The Algorithmic Arms Race: Are Closed Models Creating an AI Cold War?

Big Tech’s closed models are fueling an AI arms race. Is collaboration dead—and are we heading toward a new Cold War?

The New Superpower Showdown Isn’t About Nukes—It’s About Models

In 20th-century geopolitics, it was nuclear warheads. In the 21st century, it might just be neural nets.

As the world's largest AI companies (OpenAI, Google DeepMind, Meta, Anthropic, and others) battle to build the most powerful models, the stakes look eerily familiar: arms-race dynamics, secrecy, strategic advantage, and global consequences.

Welcome to the AI Cold War, where the weapons are algorithms and the prize is control over the future of intelligence.

Why Closed Models Are Winning the Race

For all the talk of “open source,” the most advanced AI systems today—GPT-4, Gemini 1.5, Claude 3, and others—are locked behind corporate APIs, training data obscured, weights undisclosed.

Why?

🔒 Security: Prevent misuse, including deepfakes, disinformation, or cyberattacks

💼 Profit: Proprietary models are monetized via subscriptions, APIs, and enterprise deals

🛡️ Power: Knowledge is leverage—whoever builds the smartest AI gains geopolitical clout

But as secrecy deepens, transparency, collaboration, and shared progress are falling by the wayside.

Global Tensions Are Fueling the Fire

It’s not just corporate competition—it’s geopolitical. Governments are backing national AI champions. China, the U.S., the EU, and others are racing to secure data pipelines, compute capacity, and sovereign AI capabilities.

Think of:

- 🇨🇳 China’s push for Baidu’s ERNIE and SenseTime’s models

- 🇺🇸 U.S. dominance via OpenAI, Anthropic, Meta, and NVIDIA

- 🇪🇺 EU’s push for regulated, explainable AI under the AI Act

This fractured landscape is pushing frontier research behind closed doors, with fewer shared benchmarks and more black-box systems.

The Risks of an Algorithmic Arms Race

The consequences of this closed-model race include:

🚫 Lack of transparency – Making it harder to audit bias, safety, or fairness

🧠 Fragmented knowledge – Slowing down collective understanding of intelligence

📉 Duplicated effort – Wasting resources as rival labs chase the same breakthroughs in secret, siloed efforts

⚠️ Asymmetric risk – Powerful tools in the hands of the few, not the many

And worst of all? The public is left out of the loop, even as these tools shape economies, education, and everyday life.

Is There a Better Way Forward?

Some are fighting back. Initiatives like OpenWeights, Hugging Face, and EleutherAI champion open science, reproducibility, and responsible model sharing. But they’re underfunded—and outpaced.

For AI to serve humanity, not just shareholders or state actors, we need:

✅ Open standards for safety, ethics, and benchmarks

✅ Cross-border research alliances

✅ Incentives for openness—from funders, governments, and consumers

✅ Shared governance frameworks before power becomes too concentrated

Because collaboration, not competition, built the internet—and it may be the only way to keep AI safe.

Conclusion: Intelligence Without Trust Is Just Power

The AI Cold War isn’t inevitable—but it is accelerating.

If the future of intelligence is locked behind closed doors, we don’t just lose transparency—we risk losing control.

It’s time to ask: Are we building smarter machines… or just a smarter way to repeat old mistakes?