The Default Dilemma: Are Pretrained Biases Deciding Your Fate?

Are pretrained AI models making biased decisions about you? Explore the hidden risks behind the default assumptions of today’s algorithms.

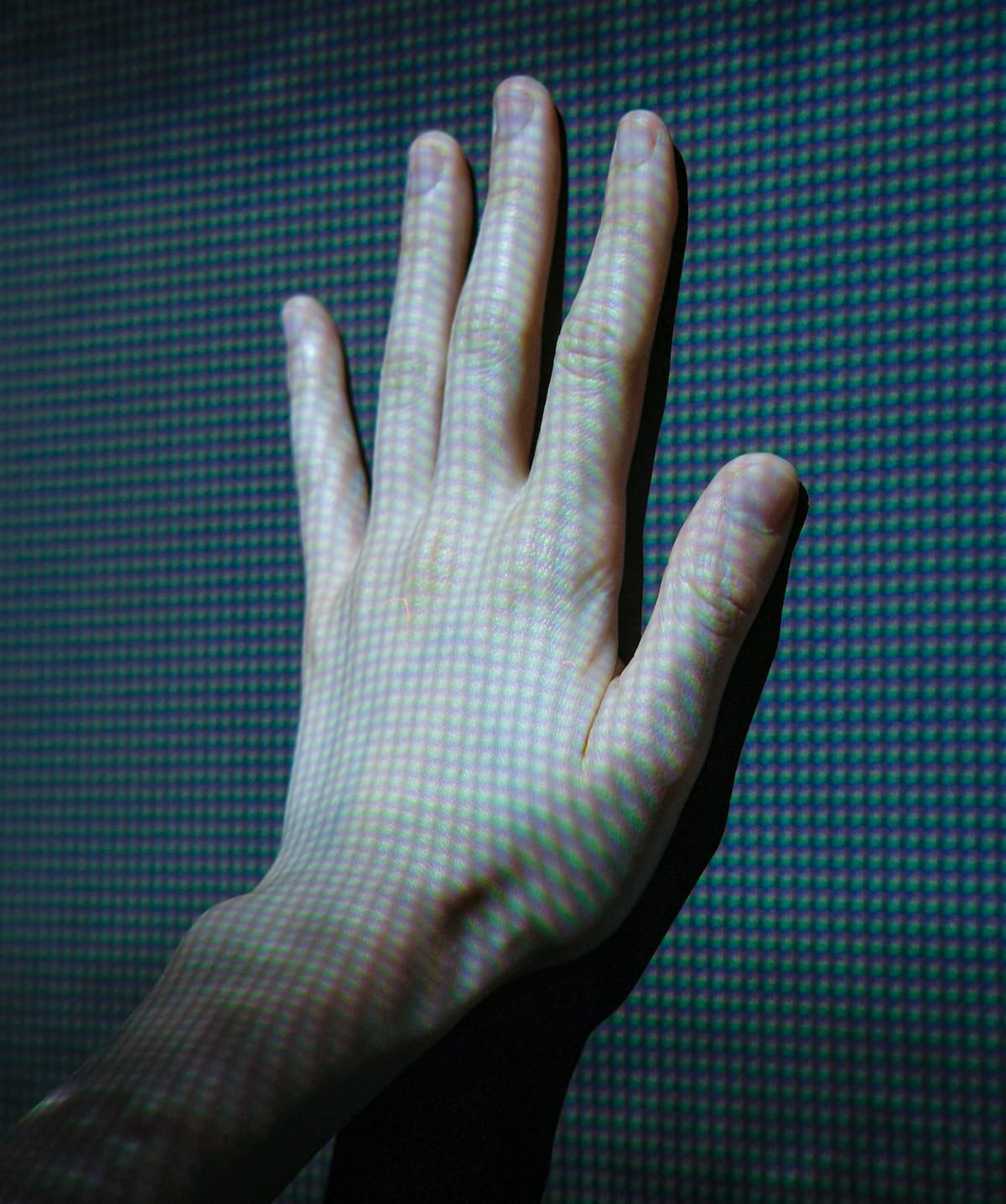

When AI makes decisions about you—without ever knowing you—who’s really in control?

The Invisible Hand of Pretrained AI

Imagine being denied a loan, flagged in a job application, or misidentified in a security system—all because an algorithm trained on someone else’s reality made the call. That’s not science fiction. It’s the very real consequence of pretrained biases baked into artificial intelligence models.

As AI becomes central to everything from hiring and healthcare to policing and finance, one uncomfortable truth is surfacing: your fate might already be influenced—if not decided—by default settings you never opted into.

How Pretrained Biases Enter the System

Most AI models, especially large foundation models, are pretrained on massive datasets scraped from the internet. While this offers broad capabilities, it also embeds societal stereotypes, cultural assumptions, and historical inequalities.

According to a 2022 study from Stanford’s Center for Research on Foundation Models, even top-performing models like GPT and CLIP exhibit racial, gender, and socioeconomic biases—because they reflect the biases present in their training data.

Key sources of inherited bias include:

- Imbalanced datasets (e.g., more data from Western, English-speaking users)

- Outdated norms (e.g., associating certain job titles with specific genders)

- Toxic content (e.g., forums, comments, and unfiltered web pages)
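The first item on that list, imbalance, is the easiest to check directly. Here is a minimal sketch, assuming each training record carries some group label; the `language` field, the toy `sample` list, and the `representation_shares` helper are all hypothetical names made up for illustration.

```python
from collections import Counter

def representation_shares(records, key):
    """Return each group's share of the records for the given attribute."""
    counts = Counter(record[key] for record in records)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}

# Hypothetical toy sample; a real check would read the actual training corpus.
sample = [
    {"text": "doc 1", "language": "en"},
    {"text": "doc 2", "language": "en"},
    {"text": "doc 3", "language": "en"},
    {"text": "doc 4", "language": "en"},
    {"text": "doc 5", "language": "en"},
    {"text": "doc 6", "language": "en"},
    {"text": "doc 7", "language": "hi"},
    {"text": "doc 8", "language": "sw"},
]

shares = representation_shares(sample, "language")
for group, share in sorted(shares.items(), key=lambda item: -item[1]):
    print(f"{group}: {share:.1%}")
```

A lopsided breakdown like this one does not prove a model will behave unfairly, but it is a cheap early warning that some groups are barely represented in what the model learned from.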

The Real-World Impact of Default Assumptions

Biases aren’t just theoretical—they impact lives.

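- In hiring: Resume-screening algorithms have been shown to favor male-coded language. Reuters reported in 2018 that Amazon had scrapped an experimental recruiting tool after it learned to penalize resumes containing the word “women’s.”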

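- In healthcare: Risk prediction models have underestimated the needs of Black patients. A 2019 study in Science traced this in one widely used algorithm to its use of past healthcare costs as a proxy for medical need.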

- In justice: Predictive policing tools often disproportionately target minority neighborhoods.

What makes this worse is opacity. Most users—and even organizations—don’t fully understand how decisions are made, or what assumptions the AI started with.
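Even when the model itself is opaque, its outcomes can still be compared across groups. One common starting point is the disparate impact ratio: each group's rate of favorable decisions divided by a reference group's rate. The sketch below is illustrative only; the group names, the toy `outcomes` data, and the helper functions are hypothetical, and the 0.8 cutoff borrows the "four-fifths" rule of thumb from US employment guidelines as a screening signal, not as a legal test.

```python
def selection_rate(decisions):
    """Fraction of favorable decisions (1 = approved, 0 = rejected)."""
    return sum(decisions) / len(decisions)

def disparate_impact_ratios(decisions_by_group, reference_group):
    """Each group's selection rate divided by the reference group's rate."""
    reference_rate = selection_rate(decisions_by_group[reference_group])
    if reference_rate == 0:
        raise ValueError("reference group has no favorable decisions")
    return {
        group: selection_rate(decisions) / reference_rate
        for group, decisions in decisions_by_group.items()
        if group != reference_group
    }

# Hypothetical decisions from a screening model for two applicant groups.
outcomes = {
    "group_a": [1, 1, 1, 0, 1, 0, 1, 1, 0, 1],  # 7 of 10 approved
    "group_b": [1, 0, 0, 0, 1, 0, 0, 1, 0, 0],  # 3 of 10 approved
}

ratios = disparate_impact_ratios(outcomes, reference_group="group_a")
for group, ratio in ratios.items():
    flag = "review" if ratio < 0.8 else "ok"
    print(f"{group}: ratio {ratio:.2f} ({flag})")
```

With these toy numbers, group_b is approved at roughly 0.43 times the rate of group_a, well under the 0.8 rule of thumb. A gap like that does not explain why the model behaves this way, but it shows where to start asking.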

Why It’s Hard to Spot (and Fix)

Pretrained biases are like invisible ink: hard to detect unless you’re looking for them, and often missed until they cause harm.

Several challenges persist:

- Black-box models: Developers can't always explain specific outputs.

- Transfer learning risks: When biased base models are fine-tuned for specific tasks, they often carry their inherited bias into new domains.

- Data inertia: Cleaning or curating better data is expensive, and model retraining is technically complex.

As AI moves from tool to decision-maker, ignoring these defaults isn’t an option.

Toward Transparent and Fair AI

Thankfully, a growing movement is pushing for accountability:

- Algorithmic audits (e.g., by AI Now Institute and Hugging Face)

- Bias detection and mitigation toolkits like IBM’s open-source AI Fairness 360

- Regulatory and policy frameworks such as the EU AI Act and the White House’s Blueprint for an AI Bill of Rights

Forward-thinking companies are also rethinking their stacks—investing in explainability tools, adopting diverse training data, and implementing “bias bounties” to uncover hidden issues.
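To make the idea of a bias audit a little more concrete, here is one kind of check such a review might include: comparing true positive rates across groups, often called the equal opportunity difference. This is a plain-Python sketch and not the API of any particular toolkit; the `rows` data and the `true_positive_rates` helper are hypothetical.

```python
from collections import defaultdict

def true_positive_rates(rows):
    """Per-group share of truly qualified cases that the model approved.

    Each row is (group, y_true, y_pred) with 1 = positive, 0 = negative.
    Groups with no truly positive cases are left out of the result.
    """
    hits = defaultdict(int)
    positives = defaultdict(int)
    for group, y_true, y_pred in rows:
        if y_true == 1:
            positives[group] += 1
            hits[group] += y_pred
    return {group: hits[group] / positives[group] for group in positives}

# Hypothetical audit sample: (group, actual outcome, model prediction).
rows = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 1),
    ("group_a", 1, 0), ("group_a", 0, 0), ("group_a", 0, 1),
    ("group_b", 1, 1), ("group_b", 1, 0), ("group_b", 1, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 0, 0),
]

rates = true_positive_rates(rows)
gap = rates["group_a"] - rates["group_b"]
print(rates, f"gap = {gap:.2f}")
```

In this toy sample the model correctly approves three of four qualified people in group_a but only one of four in group_b. That is the kind of gap an audit is meant to surface before a system goes live.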

Conclusion: Who Controls the Defaults?

In a world increasingly shaped by AI, defaults matter. And if we don’t interrogate them, they’ll keep silently deciding who gets hired, who gets heard, and who gets left behind.

The Default Dilemma reminds us that behind every algorithmic outcome is a set of human choices, some deliberate, others just inherited. To build a future where AI is fair, we must question not just the answers AI gives but also the assumptions it starts with.