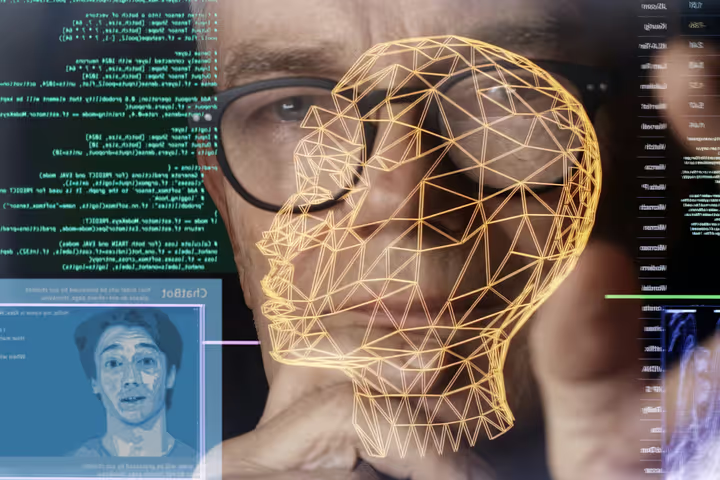

The Global AI Rulebook: Inside the Race to Regulate Intelligence

AI laws are coming fast—but unevenly. Explore how the EU, US, and China are shaping the global AI rulebook and what’s at stake.

AI is moving fast. Lawmakers are scrambling to keep up.

From Brussels to Beijing to Washington, the race is on to write the global AI rulebook—before unchecked intelligence rewrites the rules for us.

With generative AI reshaping economies and influencing elections, governments are no longer asking whether AI should be regulated, but how, and who gets to decide.

Why Regulating AI Is So Complex

AI isn’t like past technologies. It’s:

- Opaque: Models often make decisions no one fully understands

- Borderless: An algorithm trained in one country can affect users worldwide

- Rapidly evolving: Regulations can become outdated before they’re enacted

As a result, nations are struggling to balance innovation and oversight—with wildly different approaches.

Europe Leads with the AI Act

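The EU AI Act, adopted in 2024, is the world’s first comprehensive legal framework for AI. It entered into force in August 2024, with most obligations phasing in between 2025 and 2027.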

It classifies AI systems by risk—banning some, tightly regulating others. Key rules:

- High-risk AI (like hiring tools or credit scoring) must meet transparency, safety, and accountability standards

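- Prohibited uses include social scoring and real-time remote biometric identification (such as facial recognition) in publicly accessible spaces for law enforcement, with narrow exceptions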

The EU’s stance is clear: protect citizens first, innovate second.

The U.S. Focuses on Voluntary Standards

The U.S. is taking a lighter-touch approach—for now.

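Biden’s October 2023 AI Executive Order (EO 14110) set broad guardrails, focusing on:

- Safety testing and reporting for the most powerful models

- Protections against algorithmic discrimination

- Government use of AI

Rather than sweeping legislation, the U.S. has leaned on executive action and voluntary commitments from leading AI companies, and the 2023 order itself was revoked in January 2025. Critics argue this leaves Washington moving too slowly to rein in big tech.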

China: Control Meets Acceleration

China’s model is unique: regulate AI to preserve state authority, not just public safety.

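Its rules mandate:

- Real-name verification for users of generative and deep synthesis services

- Content controls requiring generative AI output to uphold “core socialist values”

- Strict oversight of “deep synthesis” technologies such as deepfakes, including labeling of synthetic content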

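While some see this as draconian, China has moved faster than most to enforce AI controls, especially in public-facing applications. Binding rules on recommendation algorithms took effect in 2022, and rules on deep synthesis and generative AI services followed in 2023.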

A Fragmented Future?

With the world’s top powers charting separate paths, we may face an AI governance gap:

- Companies may “jurisdiction shop” to train models where laws are lax

- A lack of global standards could fuel AI inequality and cross-border risk

- Differing definitions of harm, privacy, and accountability will make global collaboration harder

The danger? An AI Cold War, where ideological divides drive how intelligence is regulated.

Conclusion: The Clock Is Ticking

AI regulation is no longer theoretical. The stakes are real—for democracy, economics, and human rights.

The world needs not just national policies, but global coordination. Otherwise, we risk letting a handful of companies or countries shape intelligence for the rest of us.

The rulebook is being written. The only question is: who holds the pen?