The Overfit Oracle: When Models Memorize Instead of Understanding

Discover how overfitting turns AI models into brilliant mimics—smart on the surface, flawed underneath.

In the race to build ever-larger AI models, performance often masks a deeper issue: many systems excel at memorizing patterns but falter when asked to truly understand them. This phenomenon, known as overfitting, is turning some of our smartest models into little more than statistical echo chambers. Welcome to The Overfit Oracle—where brilliance is measured in repetition, not reasoning.

What Is Overfitting, Really?

In machine learning, overfitting happens when a model performs exceptionally well on its training data but poorly on new, unseen inputs. The model has fit the noise and quirks of its training set too closely, memorizing examples instead of extracting patterns that generalize.

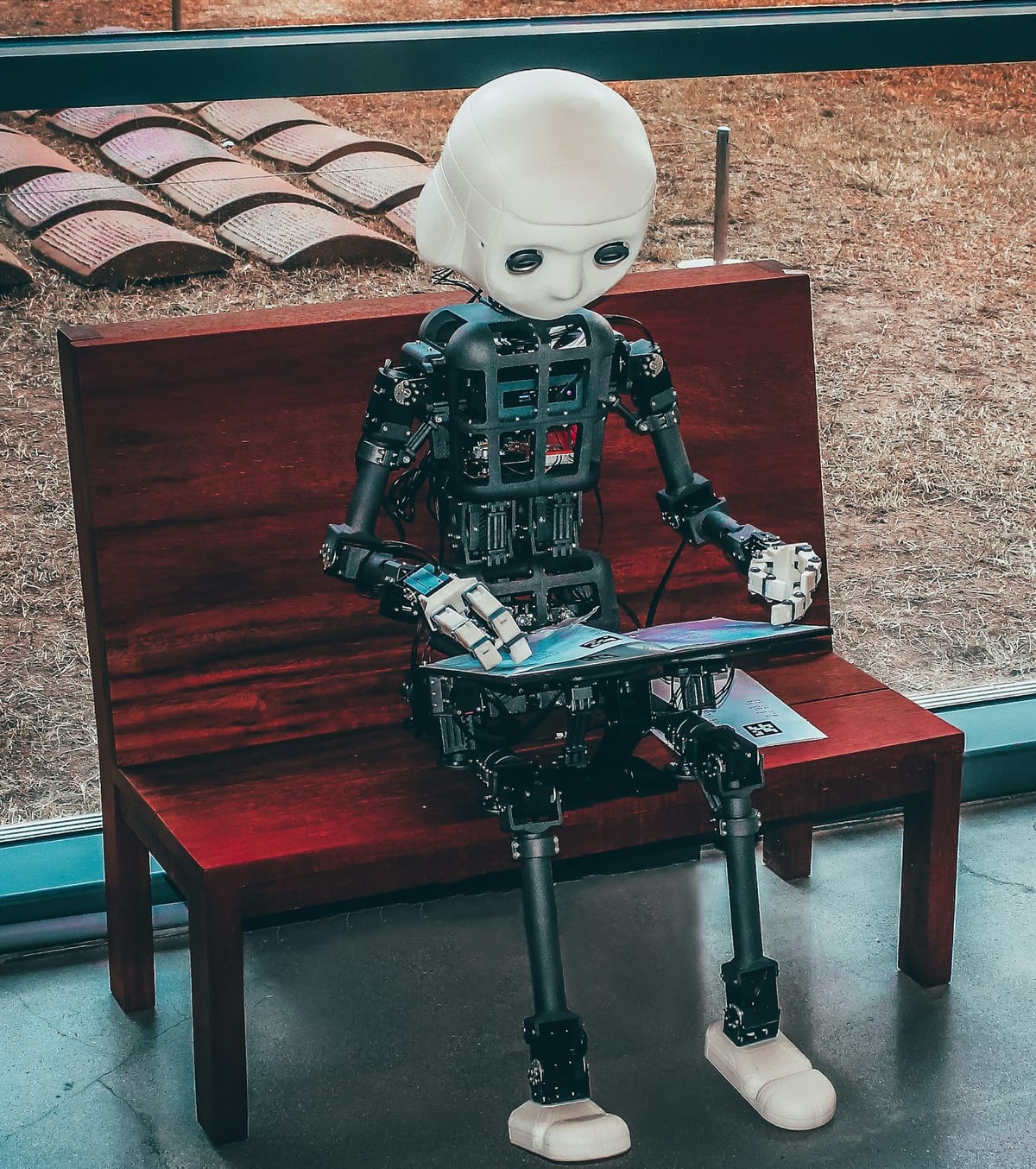

Think of a student who aces a practice test by memorizing answers, then fails the real exam because they didn’t grasp the underlying concepts.
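To make the gap between training and test performance concrete, here is a minimal sketch in plain Python. Everything in it is an assumption chosen for illustration: the synthetic data follows a made-up ground truth (y = 2x + 1 plus noise), `fit_memorizer` is a simple nearest-neighbor lookup standing in for a model that memorizes, and `fit_line` is an ordinary least-squares fit standing in for a model that generalizes.

```python
import random


def make_data(n, rng):
    """Noisy samples of the hypothetical ground truth y = 2x + 1."""
    xs = [rng.uniform(0.0, 10.0) for _ in range(n)]
    ys = [2.0 * x + 1.0 + rng.gauss(0.0, 1.0) for x in xs]
    return xs, ys


def fit_line(xs, ys):
    """Ordinary least squares for y = a*x + b (the 'student who understands')."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    a = cov / var
    b = mean_y - a * mean_x
    return lambda x: a * x + b


def fit_memorizer(xs, ys):
    """1-nearest-neighbor lookup: return the label of the closest training point."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]


def mse(model, xs, ys):
    """Mean squared error of a model on a dataset."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


rng = random.Random(0)
train_x, train_y = make_data(50, rng)
test_x, test_y = make_data(500, rng)

line = fit_line(train_x, train_y)
memorizer = fit_memorizer(train_x, train_y)

# (train error, test error) for each model
results = {
    "line": (mse(line, train_x, train_y), mse(line, test_x, test_y)),
    "memorizer": (mse(memorizer, train_x, train_y), mse(memorizer, test_x, test_y)),
}

for name, (train_err, test_err) in results.items():
    print(f"{name:10s} train MSE = {train_err:.3f}  test MSE = {test_err:.3f}")
```

The memorizer scores a perfect zero on the data it has already seen, because it simply looks up the stored answer. On fresh samples it also reproduces the noise it memorized, so its error should come out noticeably higher than the line's. That train/test gap is the usual symptom of overfitting.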

In large language models (LLMs), this shows up as:

- Verbatim repetition of training examples

- Trouble reasoning about inputs that fall outside the contexts seen in training

- Inability to apply logic beyond surface patterns

A 2024 Stanford paper reported that some top-tier LLMs reproduced entire chunks of copyrighted text verbatim, which points to exact recall rather than generalization.
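One rough way to spot verbatim recall is to measure how many word n-grams in a model's output also appear, word for word, in a reference corpus. The sketch below is only an illustration under simple assumptions: `verbatim_overlap` is a hypothetical helper, tokenization is a plain whitespace split, and the "corpus" is a tiny in-memory list rather than a real training set.

```python
def ngrams(tokens, n):
    """Set of all contiguous n-token windows in a token list."""
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def verbatim_overlap(output, corpus_docs, n=5):
    """Fraction of the output's word n-grams that appear verbatim in the corpus.

    Returns 0.0 when the output is shorter than n words.
    """
    out_grams = ngrams(output.lower().split(), n)
    if not out_grams:
        return 0.0
    corpus_grams = set()
    for doc in corpus_docs:
        corpus_grams |= ngrams(doc.lower().split(), n)
    return len(out_grams & corpus_grams) / len(out_grams)


# Toy stand-in for a training corpus (an assumption for illustration).
corpus = ["the quick brown fox jumps over the lazy dog near the river bank"]

copied = "The quick brown fox jumps over the lazy dog"
paraphrased = "a fast brown fox leaps across a sleepy dog"

print(verbatim_overlap(copied, corpus))       # every 5-gram is in the corpus
print(verbatim_overlap(paraphrased, corpus))  # no 5-gram is in the corpus
```

A score near 1.0 suggests the output was copied, while a score near 0.0 suggests it was not lifted word for word. A real audit would need proper tokenization, punctuation handling, and an index over a far larger corpus, so treat this as a starting point only.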

Bigger Isn’t Always Smarter

Modern AI models are trained on terabytes of text—books, websites, forums, code repositories. With enough capacity, they can memorize an enormous amount of that data. But sheer size doesn’t guarantee true intelligence.

As models scale, the line between learning and copying gets blurry.

The result? Models that sound fluent and fact-rich… until you ask them to reason, adapt, or handle ambiguity. That’s when the oracle starts to crumble.

Why It Matters: From Accuracy to Trust

Overfitted models can create major risks in real-world applications:

- In healthcare, regurgitating outdated medical guidelines could harm patients.

- In law, parroting biased legal rulings may reinforce systemic injustice.

- In finance, rigid pattern recognition could miss novel risks or trends.

Worse, users often can’t tell the difference. If an AI sounds confident, it’s easy to mistake recall for reasoning—and build dangerous trust in outputs that were never truly “understood.”

Toward Models That Generalize, Not Just Memorize

To fight overfitting, AI researchers are experimenting with:

- More diverse and dynamic training datasets

- Regularization techniques such as dropout, data augmentation, and adversarial training

- Better benchmarking tools that test true reasoning, not just pattern-matching

- Architectural shifts toward models that integrate logic, causality, or symbolic reasoning
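Of the techniques listed above, dropout is the easiest to show in a few lines. The sketch below implements the common "inverted dropout" variant in plain Python. The function name, the `p_drop` parameter, and the toy activations are assumptions for illustration; in practice you would use the dropout layer that ships with your deep learning framework.

```python
import random


def dropout(activations, p_drop, rng, training=True):
    """Inverted dropout: zero each unit with probability p_drop during training
    and scale the survivors by 1 / (1 - p_drop) so the expected value is unchanged.
    At inference time the activations pass through untouched."""
    if not 0.0 <= p_drop < 1.0:
        raise ValueError("p_drop must be in [0, 1)")
    if not training or p_drop == 0.0:
        return list(activations)
    keep = 1.0 - p_drop
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)
activations = [1.0] * 10_000

train_out = dropout(activations, p_drop=0.5, rng=rng, training=True)
eval_out = dropout(activations, p_drop=0.5, rng=rng, training=False)

dropped = sum(1 for a in train_out if a == 0.0)
print(f"units dropped in training: {dropped} of {len(train_out)}")
print(f"mean activation in training: {sum(train_out) / len(train_out):.3f}")
print(f"mean activation at inference: {sum(eval_out) / len(eval_out):.3f}")
```

Because a different random subset of units is silenced on every training step, the network cannot rely on any single unit to store a specific training example. That pressure nudges it toward redundant, more general features.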

Recent explorations into multimodal reasoning and "chain-of-thought" prompting, including work at OpenAI, are early steps toward more resilient, thoughtful models.

Conclusion: From Oracle to Student

The Overfit Oracle is a cautionary tale. A model that remembers everything—but understands nothing—can dazzle in a demo and fail in the field. In the next era of AI, we must build systems that don't just sound smart, but think smart.

Because intelligence isn’t in the answers—it’s in how you find them.